Methodology

This resource reviews the available evidence on interventions and summarizes it, together with the supporting research that documents the efficacy and effectiveness of interventions designed to address the needs of women and girls.

The Complexities of Measuring What Works

The purpose of this resource is to gather evidence on which programs have positive outcomes for women in HIV/AIDS prevention, treatment and care, and to make that evidence accessible for use in developing policies and programs. This section explains the methodology What Works used to identify the evidence. This methodology was reviewed and endorsed by experts at meetings in 2010 in Cape Town, South Africa (hosted by the Open Society Foundations' Public Health Program), and in 2011 in Washington, DC (hosted by the Office of the Global AIDS Coordinator). The work faces a number of challenges, including the complexity of measuring what works and the choice of an appropriate system for rating the strength of evidence in primary investigations and in programmatic delivery.

Understanding the epidemiology of HIV (how it is spread and who is at risk) is critical for developing and evaluating successful interventions (Chin, 2007). Measuring "what works" is complex because the outcomes and impacts of interventions depend on a number of biological and proximate determinants in the etiological pathway (Boerma and Weir, 2005). The reproductive number for HIV, R0[1], is a key concept for understanding the epidemic in any given population.

$$R_0 = \beta D c$$

where $\beta$ is the average probability of transmission per sexual contact,

$D$ is the average duration of infectiousness, and

$c$ is the average number of sexual contacts per unit time.
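Because R0 is simply the product of the three parameters (β, D and c), it can be evaluated directly. The sketch below is a minimal illustration, not a modeling tool: the function names `reproductive_number` and `threshold_reading` are hypothetical, the input values are purely illustrative rather than estimates from the literature, and it assumes D and c are expressed in the same time unit. The threshold reading follows the interpretation quoted in footnote [1].

```python
import math


def reproductive_number(beta: float, duration: float, contact_rate: float) -> float:
    """R0 = beta * D * c.

    beta: average probability of transmission per sexual contact (0 to 1)
    duration: average duration of infectiousness, D (e.g. in years)
    contact_rate: average number of sexual contacts per unit time, c
                  (assumed to use the same time unit as duration)
    """
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta is a probability and must lie in [0, 1]")
    if duration < 0 or contact_rate < 0:
        raise ValueError("duration and contact_rate must be non-negative")
    return beta * duration * contact_rate


def threshold_reading(r0: float, tol: float = 1e-9) -> str:
    """Qualitative reading of R0 relative to the threshold of 1."""
    if math.isclose(r0, 1.0, abs_tol=tol):
        return "maintained (endemic)"
    return "epidemic spread" if r0 > 1.0 else "dies out"


# Purely illustrative inputs, not estimates: beta = 0.002 per contact,
# D = 10 years, c = 100 contacts per year.
r0 = reproductive_number(0.002, 10, 100)
print(round(r0, 3), threshold_reading(r0))
```

Because R0 is a product, halving any single factor halves R0: with the illustrative values above, halving c from 100 to 50 contacts per year moves R0 from 2 to the threshold of 1. This is why interventions acting on any one of the three parameters can matter.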

R0 "is the number of secondary cases which one case would produce in a completely susceptible population [which] depends on the duration of the infectious period, the probability of infecting a susceptible individual during one contact, and the number of new susceptible individuals contacted per unit of time" (Dietz, 1993: 23). Each of these parameters is subject to significant heterogeneity and to context-specific mediating variables that need to be estimated in order to fully understand HIV interventions in a community or population. Not surprisingly, the reproductive number for HIV varies considerably within and across countries and among groups within countries (epidemic heterogeneity). Furthermore, HIV prevalence reflects the cumulative burden of past infections, net of deaths, rather than current transmission. Therefore, insofar as possible, programs and policies should be based on HIV incidence, the rate of new infections. A practical way to do this is to ask: "Where will the next 1,000 infections occur?" (Bertozzi et al., 2008: 833). Replicable methods for answering this question in any community use epidemiological modeling to estimate where new infections are most likely to occur, both geographically and by population, so that prevention interventions can be targeted accordingly. These modeling methods are available and are being refined.

Additionally, given the difficulty of conducting cohort studies to estimate incidence, cross-sectional approaches, such as newer HIV assays being tested to distinguish recent HIV infections from chronic, longer-term infections, should help provide more timely evidence (Kim et al., 2012) on both how to target interventions and how to measure their impact. Regardless, policy and program responses to this question must be based on a clear understanding of the epidemiology of HIV, of the contexts in which women and girls live and the factors that make them vulnerable to HIV infection, and of clear evidence of what works. Approaches such as Know Your Epidemic; Know Your Response (KYE/KYR) incorporate epidemiology, social context, culture and cost into planning and targeting country and sub-national responses (WHO, 2011b). Interventions such as counseling and testing operate in specific socioeconomic, cultural (including gender) and demographic settings. To affect HIV transmission, they must change "proximate determinants," such as number of sexual partners, male and female condom use and blood safety practices, which in turn act through biological determinants (exposure, efficiency of transmission per contact and duration of infectivity). "The distinction between underlying and proximate determinants is important for the conceptualization of pathways through which underlying determinants, including interventions, may affect infection" (Boerma and Weir, 2005: S64). Thus, when this compendium of evidence determines that an intervention works, the intervention has been shown to act through a causal pathway affecting HIV or at least a proximate determinant, such as partner reduction or condom use. A word of caution is due, however, when biological outcomes are not measured.
For example, an intervention may increase condom use, but "the effect of such an increase would depend on the extent to which condoms were used during sexual contact between infected and susceptible partners" (Boerma and Weir, 2005: S66). It is also important to note that treatment and care interventions aim for outcomes other than HIV transmission and acquisition; therefore, a range of outcomes is included across the spectrum of HIV- and AIDS-related interventions for women and girls. Interventions that affect the enabling environment, increasingly referred to as structural interventions, can take a long time to have an effect on HIV incidence (Hayes et al., 2010a; Auerbach et al., 2011). In such cases an intermediate outcome may be more appropriate: for example, where economic dependency leaves women less able to negotiate condom use, condom use might be the most appropriate outcome for a program that targets women's economic status. Implementation alone, however, is not enough: interventions must be implemented in gender-sensitive ways that respect the rights of women and men (Ogden et al., 2011).

The Challenge of Rating Evidence

A second challenge is determining the appropriateness of evidence and the strength of that evidence to inform policies and programming. A number of systems have been developed to rate the strength of evidence (Gray, 1997; West et al., 2002; Guyatt et al., 2008; Evans, 2003; Centre for Evidence Based Medicine, 2009; Liberati et al., 2009; Baral et al., 2012b). Early systems focused on evidence-based medicine while newer systems have broadened the focus to evidence-based public health (Gray, 2009). Clearly these designations are not mutually exclusive. Figure 1 illustrates the links between the two and the rationale for distinguishing between clinical interventions for individual patients and public health programming for populations and improving health and other services.

This distinction, while intuitive, has led to a passionate debate about what types of studies provide legitimate evidence, particularly for public health interventions. The complexity of HIV and the wide range of programming needed to address it further compound the debate, which centers on the need for randomized controlled trials, considered the "gold standard" of evidence-based medicine, as compared with other study designs. For some, the key distinction is between studies that have a clear counterfactual and those that do not. Writing about complex prevention interventions, Padian et al. note that "Clearly, evaluation design is not a one-size-fits-all endeavor. Combination programs should be evaluated with the most rigorous methodological designs possible. Evaluators must determine the most appropriate method based on their level of control over program implementation, the resources, feasibility, and political will for a prospective or randomized design, and the availability and quality of existing data" (Padian et al., 2011c: e27).

While randomized controlled trials are the pinnacle of evidence-based medicine, the global public health community increasingly recognizes that a variety of research designs are needed to evaluate actual programs (Yamey and Feachem, 2011). Hayes et al. note that "interventions that target determinants at the microbiological and cellular levels, if effective and feasible, are more likely to have more rapid and consistent effects that are easier to demonstrate in randomized controlled trials (RCTs) than interventions that operate at more distal points... functioning through multiple mediating factors" (Hayes et al., 2010a: S83). Furthermore, "altering the norms and behaviors of social groups can sometimes take considerable time..." (Global HIV Prevention Working Group, 2008: 12). Jewkes observes that medically oriented "clinical trials are inherently unsuited to evaluating complex interventions" (Jewkes, 2010a: 146).

The AIDS2031 Consortium argues that, because HIV incidence is the desired endpoint and HIV interventions need a long time frame to affect it, time-limited clinical trials are often not useful for generating the evidence base to combat the AIDS pandemic (AIDS2031 Consortium, 2010). Others have recommended that where relatively small-scale, tightly controlled trials show evidence of efficacy, they should be followed by effectiveness trials to demonstrate 'real-world' relevance and inform scale-up. Where effectiveness trials have been conducted, research should focus on replication and adaptation for other settings (WHO, 2010f). In addition, as others have noted, different study designs and data sources are important for evaluating the process and impact of public health interventions (Kimber et al., 2010). For example, qualitative data can provide evidence that complements effectiveness studies. Randomization should not be equated with clinical trials of clinical interventions; it can be used within a variety of methodologies.

Padian et al. call for the use of implementation science (Padian et al., 2011a), which is "the scientific study of methods to promote the integration of research findings and evidence-based interventions into health care policy and practice and hence to improve the quality and effectiveness of health services and care" (Schackman, 2010: S28). Kimber et al. recommend that evaluations 1) include both behavioral and biological outcomes and be sufficiently powered; 2) where possible, use community-level randomization; 3) compare additional interventions, or increased coverage and intensity of interventions, with what is currently available; and 4) evaluate complete packages instead of single interventions, for example, evaluating the impact of harm reduction as compared to needle exchange (Kimber et al., 2010). Published studies do not always meet all of these criteria, but they can still be used to guide programming.

Categorizing Strength of Evidence in What Works

Given the breadth of HIV-related interventions, which range from clinical treatment to structural interventions, no single system for rating the strength of evidence is perfect. Some examples of rating systems follow. GRADE (Grading of Recommendations Assessment, Development and Evaluation) has been endorsed by WHO for clinical interventions and guideline development. It rates the quality of evidence as high, moderate, low or very low and weighs that rating together with uncertainty about effects, values and preferences, and resource use (Guyatt et al., 2008; Lewin et al., 2012). SORT (Strength of Recommendation Taxonomy) sorts evidence into levels 1, 2 and 3 according to its quality, quantity and consistency (Ebell et al., 2004). The Centre for Evidence Based Medicine (CEBM) categorization lists interventions as beneficial, likely to be beneficial, likely to be ineffective or unknown, based on the available evidence (www.cebm.net). A newer system, HASTE (Highest Available Standard of Evidence), was developed to evaluate programs for men who have sex with men (MSM). HASTE evaluates interventions based on efficacy data, implementation science data and plausibility (Baral et al., 2012b), and it has potential for wider use in evaluating HIV programming.

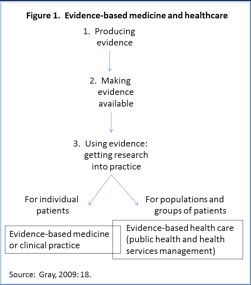

For this resource, the strength of evidence is categorized using a modification of the five levels identified by Gray (Gray, 1997), shown in Table 1. This rating scheme, labeled the "Gray Scale" in What Works, was originally identified for use in developing the Cochrane Collection of Systematic Reviews of evidence-based medicine (http://www.cochrane.org/about-us/evidence-based-health-care). An early version of What Works adopted the Gray Scale, and subsequent expert meetings (in Cape Town in 2010 and in Washington, DC in 2011) endorsed keeping this classification of the strength of evidence for What Works, with one modification. The 2009 edition of Gray's work expands its scope to include evidence-based health care (e.g., public health programming) in addition to evidence-based medicine, and it describes the same types of evidence that correspond to the five levels.

For this version of What Works, participants at the expert methodology meeting in Washington, DC in 2011 determined that dividing the Gray III category into studies that included a control group (IIIa) and those that did not (IIIb) would provide additional information for classifying the evidence supporting interventions.

Table 1. Gray Scale of the Strength of Evidence

| Type | Strength of evidence |
|------|----------------------|
| I | Strong evidence from at least one systematic review of multiple well designed, randomized controlled trials. |
| II | Strong evidence from at least one properly designed, randomized controlled trial of appropriate size. |
| IIIa | Evidence from well-designed trials/studies without randomization that include a control group (e.g. quasi-experimental, matched case-control studies, pre-post with control group) |
| IIIb | Evidence from well-designed trials/studies without randomization that do not include a control group (e.g. single group pre-post, cohort, time series/interrupted time series) |
| IV | Evidence from well-designed, non-experimental studies from more than one center or research group. |
| V | Opinions of respected authorities, based on clinical evidence, descriptive studies or reports of expert committees. |

Note: Gray includes five types of evidence (Gray, 1997). For What Works, level III has been subdivided to differentiate between studies and evaluations whose design includes a control group (IIIa) and those whose design does not (IIIb). Qualitative studies can fall in either level IV or level V, depending on the number of study participants, among other factors. For more detail about these types of studies and their strengths and weaknesses, see Gray (2009).

Identifying What Works vs. Promising

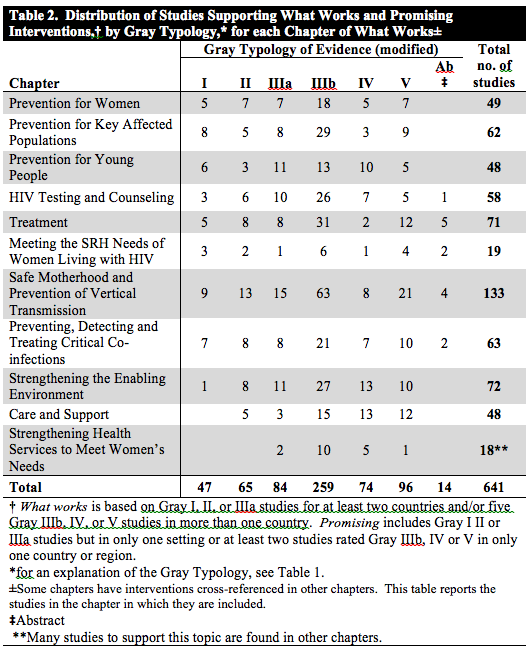

What Works incorporates two other dimensions into its methodology: the depth of evidence (how many studies support the intervention) and the breadth of evidence (how many countries contribute evidence supporting the intervention). Where the majority of the evidence, and particularly strong evidence, supports an intervention, it is listed in the relevant section as "what works." The expert review committee set the following criteria for "what works" and "promising":

What Works: Gray I, II or IIIa studies for at least two countries and/or five Gray IIIb, IV or V studies across more than one country.

Promising: Gray I, II or IIIa studies but in only one setting or at least two studies rated Gray IIIb, IV or V in only one country or region.
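The two criteria can be read as a decision rule over the Gray level and country of each supporting study. The sketch below is one possible reading, not the committee's own procedure: it assumes "and/or" means either condition suffices, treats "setting" as a country, reads the Promising rule as "at least two weaker studies that fall short of the What Works threshold," and uses a hypothetical third label, "neither," for interventions that meet neither criterion. The `Study` record and `classify` function are illustrative names.

```python
from __future__ import annotations

from dataclasses import dataclass

STRONG = {"I", "II", "IIIa"}  # randomized or controlled designs
WEAKER = {"IIIb", "IV", "V"}  # uncontrolled, non-experimental, or expert opinion


@dataclass(frozen=True)
class Study:
    gray_level: str  # one of "I", "II", "IIIa", "IIIb", "IV", "V"
    country: str


def classify(studies: list[Study]) -> str:
    """Apply one reading of the What Works / Promising criteria to an intervention."""
    strong_countries = {s.country for s in studies if s.gray_level in STRONG}
    weaker = [s for s in studies if s.gray_level in WEAKER]
    weaker_countries = {s.country for s in weaker}

    # What Works: strong studies from at least two countries, or at least
    # five weaker studies spanning more than one country.
    if len(strong_countries) >= 2 or (len(weaker) >= 5 and len(weaker_countries) > 1):
        return "what works"
    # Promising: strong studies from a single country, or at least two
    # weaker studies that do not reach the What Works threshold.
    if len(strong_countries) == 1 or len(weaker) >= 2:
        return "promising"
    return "neither"
```

For example, one RCT in country A and one quasi-experimental study in country B would classify as "what works," while five uncontrolled studies all from country A would only be "promising," because the breadth condition (more than one country) is not met.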

Much of the evidence cited in this document falls in strength levels IV and V; however, many studies fall in levels IIIa and IIIb, and there are growing numbers of systematic reviews (level I) and randomized controlled trials (level II). Not all of the interventions listed here carry the same weight, and those that are promising but require further evaluation are identified. Within each intervention, studies are listed in order of Gray level, with the strongest studies first (Gray I, II, IIIa, IIIb, etc.) and abstracts last.
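The listing order described here amounts to a two-part sort key: whether the item is an abstract, then its rank on the Gray scale. The sketch below illustrates that ordering under stated assumptions: the `Citation` record and `listing_order` function are hypothetical, and abstracts are allowed to carry no Gray level at all.

```python
from dataclasses import dataclass
from typing import Optional

GRAY_ORDER = ("I", "II", "IIIa", "IIIb", "IV", "V")  # strongest to weakest
GRAY_RANK = {level: rank for rank, level in enumerate(GRAY_ORDER)}


@dataclass(frozen=True)
class Citation:
    label: str
    gray_level: Optional[str] = None  # may be None, e.g. for conference abstracts
    is_abstract: bool = False


def listing_order(citations: list) -> list:
    """Full studies first, ordered strongest Gray level first; abstracts last.

    sorted() is stable, so citations at the same level keep their input order.
    """
    unranked = len(GRAY_ORDER)  # items without a Gray level sort after level V
    return sorted(
        citations,
        key=lambda c: (c.is_abstract, GRAY_RANK.get(c.gray_level, unranked)),
    )
```

Sorting on a tuple keeps the rule in one place: False sorts before True, so every full study precedes every abstract regardless of level.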

When an intervention had both positive and negative outcomes, this was noted. For example, microcredit can reduce HIV-related risk behaviors (Pronyk et al., 2008a), but it could also increase violence against women if the intervention is not carefully designed and appropriate to the local context (Schuler et al., 1998; Gupta et al., 2008a; Nyamayemombe et al., 2010). Likewise, where findings conflict, with some studies showing positive outcomes and others showing negative outcomes, we have noted this. Finally, discussions of "what does not work" are included in the overview section of each topic. Evidence of a problem, such as the prevalence of violence against women, is described in the introduction to each section.

In the course of reviewing the literature to generate "what works," a number of gaps emerged, and these were noted. The gaps listed are illustrative rather than exhaustive, providing a few examples; no search mechanism could be used to identify gaps systematically.

Gaps: programs that the literature indicates are needed to meet women's needs related to the HIV/AIDS pandemic, but for which there is no evidence showing what intervention would address the need.

No attempt has been made, as is done in the Cochrane Collaboration, to reanalyze the data on interventions. For review articles, the original studies are cited as reported in the review. An attempt has been made to use the original studies and primary sources; in the few cases where the original could not be located, the authors relied on review articles. Evidence from review articles is noted (e.g., x cited in y).

When possible, biological measures such as a decrease in HIV incidence or a decrease in rates of other STIs are used as evidence. If these measures are not available, evidence is drawn from studies using externally verifiable measures, such as service utilization, or, least reliably, self-reported behavior changes, such as condom use, monogamy, sexual abstinence and a decrease in number of sex partners. For many of these behaviors, however, external verification is not feasible, and self-reported behavior is the best available evidence.
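The preference order for outcome measures (HIV or STI incidence first, then externally verifiable measures such as service utilization, then self-reported behavior) can be expressed as a ranked lookup. This is a minimal sketch of that hierarchy only; the tier names and the `preferred_outcome` function are hypothetical labels introduced for illustration.

```python
from __future__ import annotations

# Preference order for outcome measures, most to least preferred (hypothetical labels).
OUTCOME_TIERS = ("biological", "externally_verifiable", "self_reported")


def preferred_outcome(outcomes: list[tuple[str, str]]) -> tuple[str, str] | None:
    """Pick the reported (measure, tier) pair from the most preferred tier.

    Measures tagged with an unknown tier are ignored; returns None if no
    usable measure was reported. Ties keep the first measure listed.
    """
    usable = [o for o in outcomes if o[1] in OUTCOME_TIERS]
    if not usable:
        return None
    return min(usable, key=lambda o: OUTCOME_TIERS.index(o[1]))
```

A study reporting self-reported condom use, clinic attendance and STI incidence would be read primarily on STI incidence; a study reporting only self-reported condom use is still usable, but it sits in the least reliable tier.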

Where possible, we have included sex-disaggregated data. Where an included intervention is relevant for both men and women but lacks sex-disaggregated data, this is noted. For chapters dominated by medical interventions, such as those related to treatment and co-infection, in which many interventions apply to both men and women, only interventions that apply specifically to women are included in the compendium. This does not imply that interventions that work for both men and women are unimportant, but rather that this compendium focuses on interventions that are distinctive for women. Interventions for men that also benefit women (e.g., interventions that improve men's health and thus reduce the burden on women caring for male family members) are, however, included.

Evidence on the Costs of Interventions

Where available, we have included studies on the costs of different interventions. However, for numerous interventions, especially most structural interventions, cost-effectiveness studies are still unavailable or insufficient. [See Strengthening the Enabling Environment] "The sparse cost effectiveness data is not easily comparable; thus, not very useful for decision making. More than 25 years into the AIDS epidemic and billions of dollars of spending later, there is still much work to be done both on costs and effectiveness to adequately inform HIV prevention planning" (Galárraga et al., 2009: 1).

How Evidence to Include in the Compendium was Identified

This resource contains research published in peer-reviewed publications and study reports with clear and transparent data on the effectiveness of various interventions for women and girls, as well as program and policy initiatives that can be implemented to reduce the prevalence and incidence of HIV and AIDS in low- and middle-income countries. Basic information and policy issues concerning HIV and AIDS treatment and care are also included. Biomedical considerations (for example, the fact that use of nevirapine to prevent vertical transmission may lead to drug resistance once a woman accesses antiretroviral therapy for her own health needs) are included insofar as they are relevant to programmatic considerations. Links are provided to WHO and other guidance on recommended combinations of drug regimens, but these regimens are not otherwise considered on this website. Most evidence in the compendium comes from developing countries; where such evidence was not available, evidence from high-income countries is included. Articles in English, Spanish and French were reviewed, although the vast majority of the literature was in English.

Search Methodology

To search for relevant interventions that had been evaluated, searches were conducted using SCOPUS[2], Medline and Popline, using the search words HIV or AIDS and wom*n. Additional topics were researched using "syphilis and HIV;" "gender and HIV;" "malaria and HIV;" and "breastfeeding and HIV." For the years 2005 to 2009, a total of 7,744 citations were generated. Of these citations, approximately 2,500 articles were reviewed in full. For the years Jan. 1, 2009 until Dec. 31, 2011, a total of 3,058 citations were generated. Of these citations, approximately 1,500 were excluded based on title examination and 750 were excluded based on article review. Approximately 1,250 articles were reviewed in detail.
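The core search string combines a Boolean OR, a Boolean AND and a truncation wildcard. Scopus, Medline and Popline each have their own query syntax and operator precedence, so the sketch below does not reproduce any database's behavior; it only illustrates the logic under the assumption that the intended query was (HIV OR AIDS) AND wom*n, matched case-insensitively on whole words. The pattern names and `matches_core_query` are hypothetical.

```python
import re

# (HIV OR AIDS) AND wom*n, matched case-insensitively on whole words.
# wom*n is approximated as "wom", any run of word characters, then "n",
# which covers "woman" and "women".
HIV_OR_AIDS = re.compile(r"\b(?:HIV|AIDS)\b", re.IGNORECASE)
WOM_N = re.compile(r"\bwom\w*n\b", re.IGNORECASE)


def matches_core_query(text: str) -> bool:
    """True if the text satisfies (HIV OR AIDS) AND wom*n."""
    return HIV_OR_AIDS.search(text) is not None and WOM_N.search(text) is not None
```

Case-insensitive keyword matching also admits false positives, such as a title using "aids" as a verb ("Visual aids for women's literacy classes"), which is one reason a match only makes a citation a candidate and title examination and article review still follow.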

If the article title indicated that there might be an intervention that could be replicated, the article was obtained and reviewed. The lead author read and reviewed all the articles obtained and determined whether there was sufficient information for inclusion in What Works and whether the intervention took place in low- or middle-income countries in Asia, Africa, Latin America or the post-Soviet states. Key interventions that had taken place only in high-income countries (namely the United States, Europe, Japan and Australia) were included only if the authors determined that the study could be relevant for developing-country contexts and the intervention had not yet been initiated in developing countries.

The lead author reviewed all titles and made the first determination about potential suitability for inclusion, then reviewed each article prior to detailed review to further determine eligibility. If a study included an intervention with a measured outcome that had been evaluated for effectiveness, a member of the What Works team [See Acknowledgements] read it and wrote up the intervention in a standard format: study year; country where the study took place; number of participants included in the study (N); study design; the intervention; and the outcome. The main co-authors reviewed and agreed on the Gray scale ranking for each study according to the study's methodology.

A few studies published before 2005, identified through a POPLINE search, have been included. Such studies were included only if they were key articles with more robust methodologies and data than more recent studies, or if more recent data did not exist. For example, all the studies on treatment options for occupational exposure to HIV were done in the 1990s, so these were included.
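Taken together, the screening rules in this section (an evaluated intervention with an outcome, a low- or middle-income setting unless a high-income study fills a gap, and extra conditions for pre-2005 studies) form a short eligibility check. The sketch below encodes one reading of those rules; in practice these were reviewer judgments, and the `Candidate` fields and `is_eligible` function are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    year: int
    low_or_middle_income: bool    # setting is a low- or middle-income country
    evaluated_intervention: bool  # intervention with an outcome, evaluated for effectiveness
    relevant_to_developing_countries: bool = False  # reviewer judgment (high-income studies)
    initiated_in_developing_countries: bool = False
    key_robust_article: bool = False  # pre-2005: more robust than more recent studies
    newer_data_exist: bool = True


def is_eligible(c: Candidate) -> bool:
    """One reading of the inclusion rules described in this section."""
    if not c.evaluated_intervention:
        return False
    if not c.low_or_middle_income:
        # High-income studies only fill a gap not yet addressed in developing countries.
        fills_gap = (c.relevant_to_developing_countries
                     and not c.initiated_in_developing_countries)
        if not fills_gap:
            return False
    if c.year < 2005:
        # Older studies need to be key, robust articles, or the only data available.
        return c.key_robust_article or not c.newer_data_exist
    return True
```

Ordering the checks this way means a pre-2005 high-income study must pass both the gap test and the pre-2005 test, which matches the cumulative way the criteria are stated.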

In addition, the authors searched the gray literature by reviewing documents from key websites, including those of UN agencies, UNAIDS, the World Health Organization (WHO), the Cochrane Collaboration, OSI, ICRW, Futures Group, Population Services International (PSI), the Population Council, ICW, the World Bank, Family Health International (FHI), AIDStar I and II, and the Guttmacher Institute. The lead author reviewed abstracts from the Conference on Retroviruses and Opportunistic Infections (CROI), held in March 2012, for inclusion; these abstracts were considered important because they report the most recent scientific advances, which would not yet be in the published literature.

Interventions were determined by the themes that emerged from study write-ups, which the authors shaped into intervention points; these are the numbered interventions in each chapter. It is important to note that these interventions emerged organically from the evidence: the authors did not select interventions and then look for supporting data.

Experts were consulted during the writing of the document [See Acknowledgements], and a number of them provided review comments that were incorporated into the final compendium. A review meeting was held in Cape Town, South Africa, February 17-19, 2010. For the 2012 review process, reviewers were emailed sections along with questions to focus their review, and they provided comments through memos, tracked changes and telephone conference calls. Forthcoming material, including interventions in progress, was not included, as there was no systematic way to include such material.

Limitations

One limitation of the methodology is that the search focused on the health literature; therefore, it may have missed other relevant literature, such as the education and legal literature. For example, increased education for girls is associated with reduced risk of HIV acquisition, yet the search did not capture program interventions to keep girls in school, which would appear in the education literature rather than the public health literature. However, a full review of the legal literature was carried out in 2014, and that section was updated.

There are likely many valuable interventions that have not been evaluated and/or published in the peer-reviewed literature, and important websites may have been unintentionally missed. Additionally, faith-based organizations (FBOs) and community-based organizations (CBOs) have clearly played a major role in responding to the AIDS pandemic and are responsible for a significant proportion of treatment, in addition to care and support, including spiritual support. The literature reviewed for this compendium of evidence did not yield many studies of programs implemented by FBOs and CBOs that met the criteria for inclusion in this document. Given the central role of these organizations within communities, this is likely a significant gap in the evidence base.

Furthermore, although the authors attempted a systematic review of the evidence and sought input from expert reviewers, some important interventions may have been inadvertently omitted. Finally, many HIV prevention programs that address key issues in novel, context-specific ways are not rigorously evaluated (Gupta et al., 2008a). This document should be viewed as a living document, to be updated as new information becomes available.

In all, the evidence for What Works and Promising interventions includes 641 studies.

[1] "When, on average, one infected person infects more than one other person, R0 is greater than (>) 1 and the result will be epidemic spread of an agent [HIV]. However, when, on average, one infected person does not infect more than one other person, R0 is less than (<) 1 and epidemic spread does not occur. When R0 is < 1, the infectious agent will slowly disappear and if R0 stays close to 1 the agent will maintain itself in the population with no or minimal growth (i.e., becomes endemic)" (Chin, 2007: 60).

[2] Scopus is the largest abstract and citation database of peer-reviewed literature and quality web sources with smart tools to track, analyze and visualize research (http://info.scopus.com/scopus-in-detail/facts/)